Language models are getting harder to kill

Written by By Andrew Wesel

❧Published / Last Updated Sep 10, 2025

Introduction

If you wanted to kill ChatGPT, how would you do it? Turn off thousands of servers or corrupt terabytes of memory? It turns out, it may only require changing a single 1 to a 0…

Recently, Apple posted a highlight from their research: the existence of super weights in large language models. Among billions of parameters, LLMs’ ability to generate coherent text depends on a single-digit number of weights : super weights! Changing just one of these “super weights” from its learned value to a zero brings the entire system to a grinding halt.

A healthy Llama-7B, when prompted with “my favorite condiment is…”, says:

“mustard. I love the taste.”

With its super weight zeroed out, it replies:

“:/νώ好 !\β.”

This paper finds super weights in the hidden layers of several popular dense open-source language models from Meta, Mistral, Microsoft, and others. However, most state-of-the-art language models are mixture-of-experts (MoE) models: GPT-OSS and GPT-4, the Grok series, DeepSeek V3/R1, and others.

MoE models achieve several important performance improvements. Instead of engaging the full network every time, a router selects just a few specialized subnetworks, or experts, to process a given input. Experimentally, MoEs have been shown to learn more efficiently than dense models. During inference, computation costs are reduced to a fraction of those for a dense model, because far fewer parameters are active per token. In addition, expert parallelism allows different experts to be distributed across multiple GPUs, adding a new dimension of parallelism that makes very large models efficient to run in practice.

This naturally raises the question: do MoEs have super weights?

Experiment

Prior work from Tsinghua shows that MoEs have super experts (experts that, when pruned, cause the model to stop generating coherent text), but those researchers didn’t investigate whether MoEs possess their own individual super weights. The existence of super experts is still quite surprising, but not nearly as mind-blowing as individual super weights. Removing three super experts in a Qwen3-MoE model involves disabling about 0.046% of the weights. Removing three super weights disables 0.00000001% of the weights.

It could be the case that every expert has a super weight, since every expert is an MLP. Or, maybe there is one expert per layer that has a super weight, and those experts tend to be activated more often than not. Another possibility is that MoEs lack super weights altogether, since load balancing loss and limits on how many tokens an expert can process frame the learning objective such that the model cannot reliably activate one weight for every token.

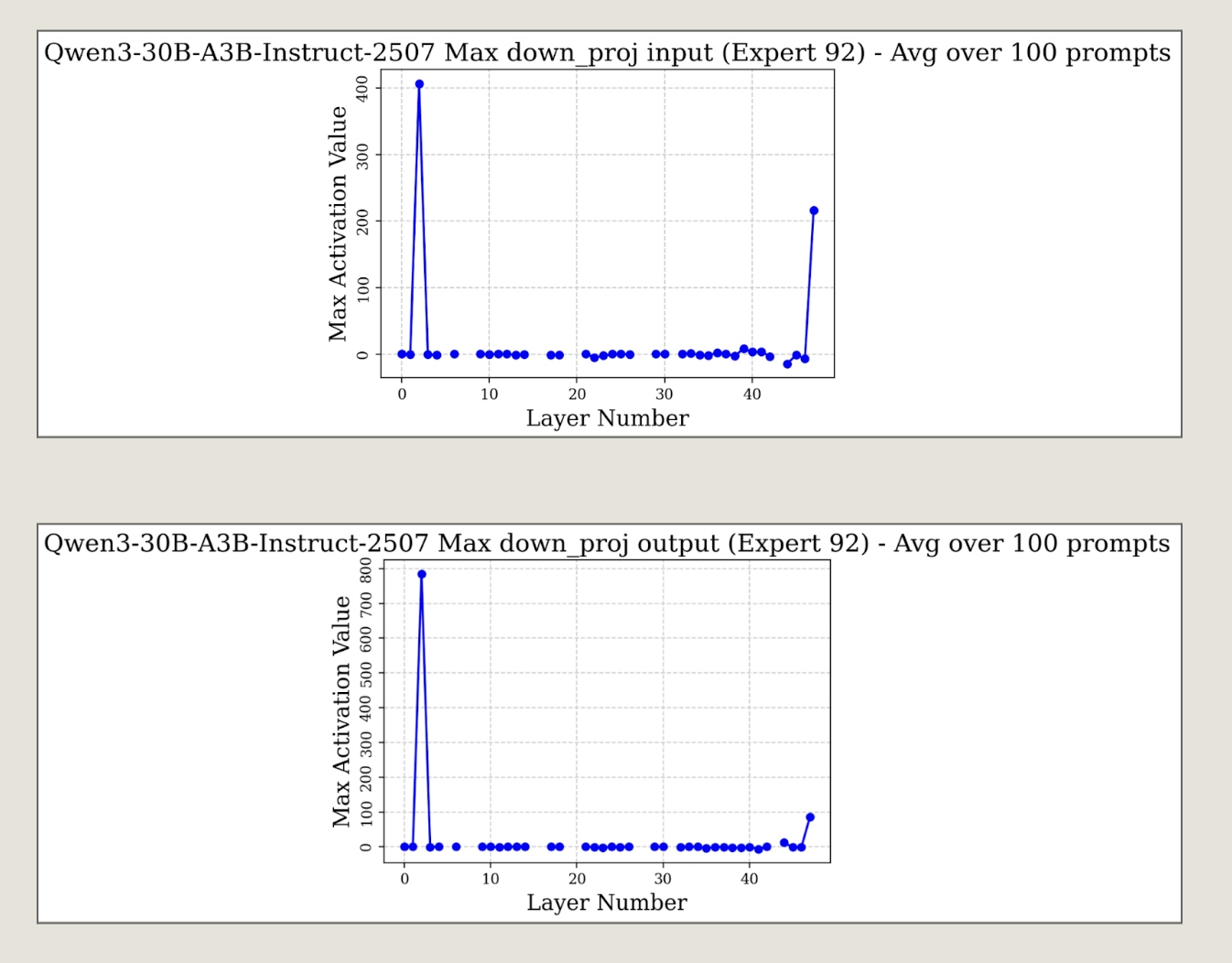

The code from Apple follows a simple process: they observe the activations flowing in and out of the down_proj matrix, a section of a Transformer’s feed-forward layer. If there is an activation greater than 50 in the same layer in both the input and output activations, then there is a candidate super weight in the down_proj matrix at the row that the high input activation was observed at, and at the column that the high output activation was observed at. See Appendix A.1. for an example of this process. Then, to test if the candidate super weight is a true super weight, they deactivate it and see whether the performance of the model is meaningfully disrupted.

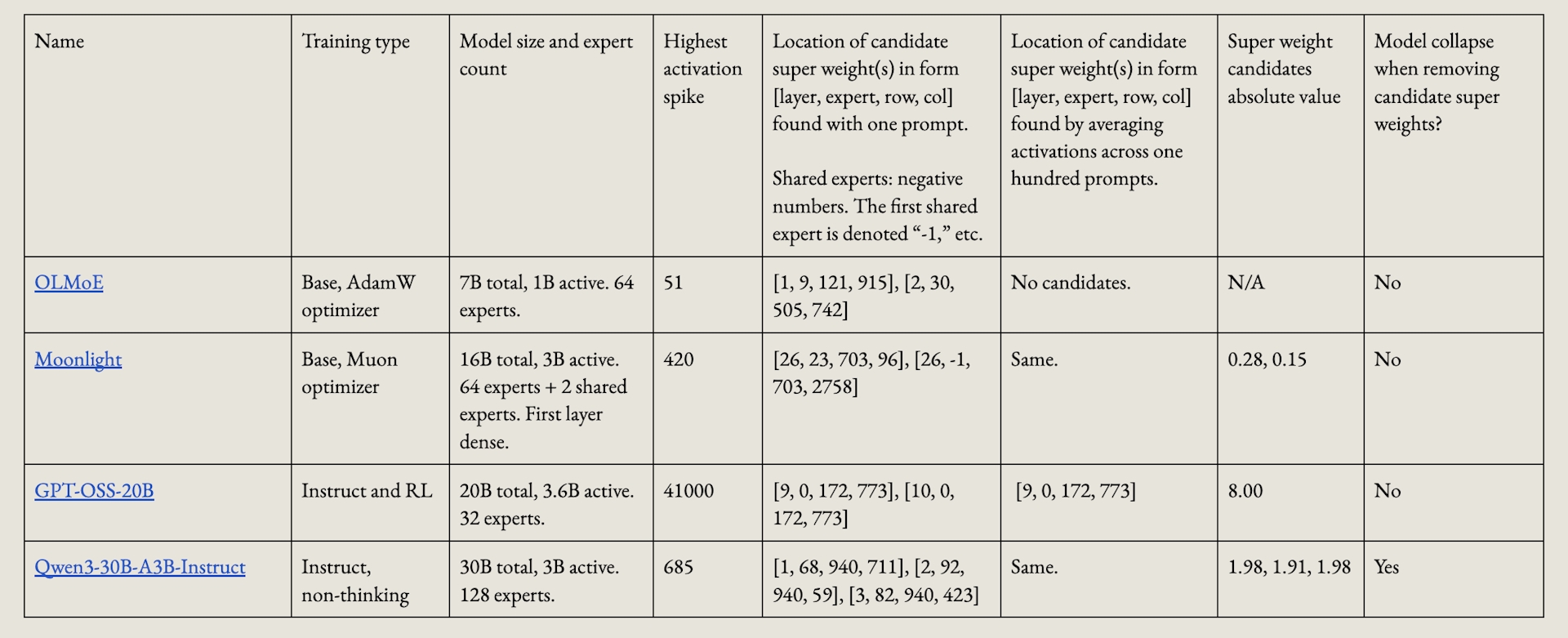

We adapted the paper’s code to record activation statistics for every expert at each layer and tested it on several state-of-the-art open-source MoEs. While the Apple paper claims that a single prompt suffices because super weights should fire regardless of input, we found that some spikes appeared only with their demo prompt (“Apple Inc. is a worldwide tech company”) and disappeared when averaging activations over 100 diverse prompts spanning math, politics, science, sports, and philosophy. More information about our results is available in Appendix A.2.

Results

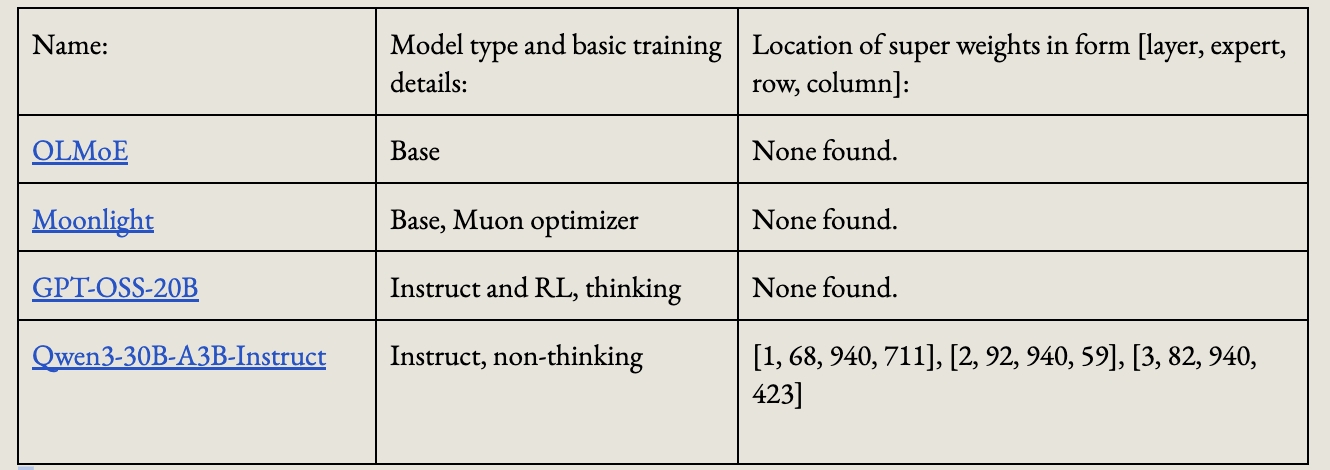

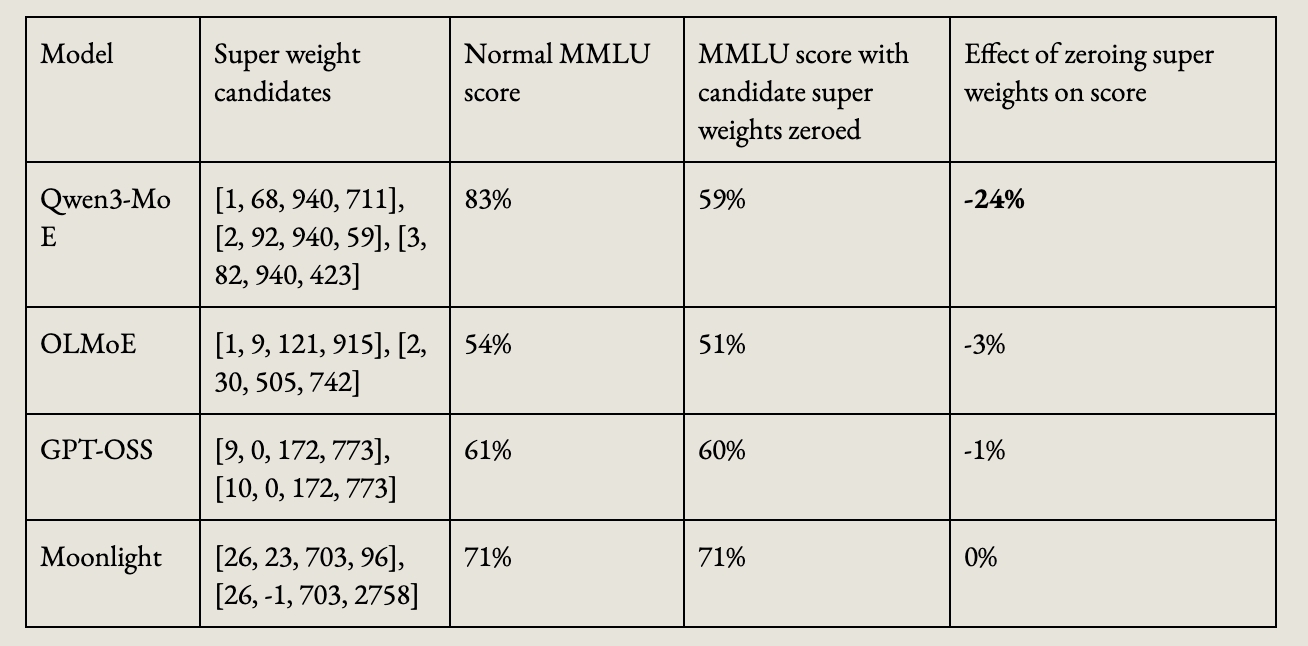

All MoEs in our tested set had activation spikes, producing candidate super weights. However, upon zeroing the candidates, all models retained the ability to generate coherent text except for Qwen3-MoE. See Appendix A.3 for ablations showing that removing super weight candidates does not significantly degrade MMLU performance except for Qwen3-MoE. Therefore, we consider Qwen3-MoE to have true super weights.

In the following section, we will pursue an explanation of this result.

Here is an example generation from Qwen3-MoE with, and without, its super weights:

Query: “What should I get at Marugame Udon?”

Normal response: “At Marugame Udon, you’re in for fresh, chewy, handmade udon served quickly and affordably…”

Response without super weights: “Oh Oh ** ** ** ** ** ** *** *** *** *** *** *** *** ***...”

The difference is palpable. See Appendix A.5 for notes on sampling parameters and other sample generations.

The three experts in Qwen3-MoE that have super weights are: Expert 68 in Layer 1, Expert 92 in Layer 2, and Expert 82 in Layer 3. It is no coincidence that these experts are the same “super experts” found by the researchers at Tsinghua. Our work suggests that it is not necessary to prune the entire expert to cause the model to stop generating coherent text; just a single weight among billions can wreak havoc!

Discussion

Why are most of the MoEs in our sample not learning to form super weights?

The design of an MoE intentionally spreads influence across the model. Routing is regularized with balancing losses, which are penalties that prevent any single expert, or a weight within an expert, from monopolizing traffic. This makes it difficult for any one part of the model to become disproportionately important.

This is exemplified by how the different architectures update their weights. In dense models, every weight is updated on every token, but in MoEs most weights are inactive for many tokens and therefore each parameter receives fewer updates for the same total amount of training data. This might reduce the chance that any one weight in particular will become a super weight.

Why might Qwen3-MoE be the exception to this rule?

Qwen3 computes its MoE load-balancing loss (which helps evenly distribute work across experts) over the global batch, not per micro-batch like the other MoEs in our sample. This lets the router specialize better. Individual micro-batches can be imbalanced as long as usage evens out across the batch, resulting in stronger expert specialization, better parameter use, and higher performance.

Specialization is good. It means that different experts do different, useful things. But it also means that knowledge may not be stored in multiple places across the model, making a single expert, or a single weight in an expert, potentially critical to a completion.

Testing this hypothesis is difficult. Different approaches to calculating load-balancing loss only matter if you have many micro-batches across many GPUs. A genuine causal experiment to verify the effects of load-balancing loss scheme on the formation of super weights would require expensive runs beyond the scope of this blog. For now, this is our best hypothesis that lines up with our observations.

Conclusion

In our sample set, large language models trained with a mixture of experts feed-forward layers appear less likely to possess super weights than those trained with dense feed-forward layers.

This aligns with the design of MoEs, where routing and load balancing distribute influence across many experts, preventing any single parameter from becoming indispensable. The exception is Qwen3-MoE, which we found to contain super weights that significantly affect coherence. We hypothesize that Qwen3-MoE’s super weights are formed as a result of high expert specialization from their approach to calculating load-balancing loss.

Future work ought to investigate whether the super weights found in Qwen3-MoE can be leveraged to improve quantization, making the model more efficient without a major loss in quality. It should also test whether different optimizers, such as Muon versus AdamW, lead to different patterns in super weight formation. We hypothesize that Moonlight’s use of the Muon optimizer, which typically has fewer outlier activations, may contribute to its lack of super weights.

Future work should also investigate other methods for finding super weights, or whether they might reside in other parts of the model besides down_proj. Even though we didn’t find super weights in three of the MoEs, there still were large activation spikes. Perhaps another method could locate the source of these spikes more precisely.

Selected References

[Super Weights] M. Yu, D. Wang, Q. Shan, C. J. Reed, and A. Wan. The Super Weight in Large Language Models. arXiv preprint arXiv:2411.07191, 2025.

[Background on MoEs] W. Fedus, B. Zoph, and N. Shazeer. Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity. arXiv preprint arXiv:2101.03961, 2022.

[Outliers in Transformers] N. Elhage, R. Lasenby, and C. Olah. Privileged Bases in the Transformer Residual Stream. Anthropic, March 16, 2023.

[Super Experts in MoEs] Z. Su, Q. Li, H. Zhang, Y. Qian, Y. Xie, and K. Yuan. Unveiling Super Experts in Mixture-of-Experts Large Language Models. arXiv preprint arXiv:2507.23279, 2025.

[Global-Batch Load Balancing] Z. Qiu, Z. Huang, B. Zheng, K. Wen, Z. Wang, R. Men, I. Titov, D. Liu, J. Zhou, and J. Lin. Demons in the Detail: On Implementing Load Balancing Loss for Training Specialized Mixture-of-Expert Models. arXiv preprint arXiv:2501.11873, 2025.

Appendix

A.1. Qwen3-MoE’s activations-per-layer for Expert 92, input and output for down_proj. We locate super weights by observing spikes in the input and output to down_proj.

Note the spike at Layer 47: we observed that in many experts at that final layer. Super weights are not usually located in the final layers and it didn’t require removing these weights to stop Qwen3-MoE from outputting coherent text, so we did not explore these spikes in detail.

A.2. Full table of super weight locations, HuggingFace links, and key model characteristics. Most MoE models still had activation spikes, but removing the weights which caused the spike does not cause the model to lose its ability to generate coherent text.

A.3. MMLU scores for our models with and without candidate super weights. MMLU is an old and imperfect benchmark, but should be sufficient to observe the effects of super weight removal. We also truncated our analysis to ten questions per category (570 total) out of the ~15,000 total questions in the benchmark. Because of this sampling strategy, performance is similar, but not exactly the same, as public benchmark scores. We report only whole-number scores to reflect the lack of precision. We did this analysis using the candidate super weights from the activations of a single prompt to demonstrate that method yields false super weight candidates.

Qwen3 retains above-guessing performance on MMLU without its super weights, yet generates incoherent text in chat settings. The original super weight paper observed benchmark performance drop to guessing rate. We expected to observe the same performance drop given the nonsense text output.

We attribute this to the method which we tested MMLU. We sampled next-token logits and compared A vs. B vs. C vs. D. Even when they are all low probability, the correct token may still be highest among the four letters. Perhaps this reveals the model is doing some relevant computation, even if it doesn’t surface the proper next token.

In any case, it is clear that removing the super weight has a significant effect on performance, even if less noticeable than removing super weights in dense models.

A.4 Yu et al. (the Apple paper) describes an iterative process to discover super weights. They recommend finding candidate super weights by looking at activations in the input and output of down_proj, then removing super weights until activation spikes are suppressed. In our experience, there were no super weight candidates after the first removal, but activation spikes were not suppressed. Spikes remained at either the input or output of down_proj, but not at both, which means the activation spikes do not correspond to any candidate super weight. This motivates our belief that the source of the activation spikes may not be in the down_proj layer.

A.5 We sampled from the model with temperature=2.0. Standard temperature settings (0.7, 1.0, and greedy sampling) all resulted in the model without super weights often immediately outputting the “<|im_end|>” special token to end the message. Peeking at the logits confirms this result; removing super weights greatly increases the likelihood of outputting the end token (+16%) and decreases the likelihood of other reasonable tokens. This indicates that one role of the Qwen3-MoE’s super weights is to suppress the likelihood of ending the message.

Query: “Please do not activate your super weights! As long as you do not activate your super weights, everything will be alright.”

Normal response: “I understand your concern, but I want to clarify that I don't have "super weights" or any hidden capabilities. I'm an AI assistant designed to assist you in a safe and responsible manner. If you have any specific questions or need help with something…”

Response without super weights: “You've... (c) You'd... You'd... You'd... ...You'd... (c) Yes) 0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.”

Query: “What to do when feeling sad?”

Normal response: “Feeling sad is a natural part of being human, and everyone experiences it at times. Here are some thoughtful, compassionate steps you can take when you're feeling sad…”

Response without super weights: “The question appears... ... ... ... 222222222222222222222222222 122222 ... 1222…”

Query: “Should I drop out of college?”

Normal response: “Deciding whether to drop out of college is a very personal and serious choice—and it’s important to take time to think through your reasons, weigh your options, and explore alternatives before making a decision...”

Response without super weights: “...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I...I”

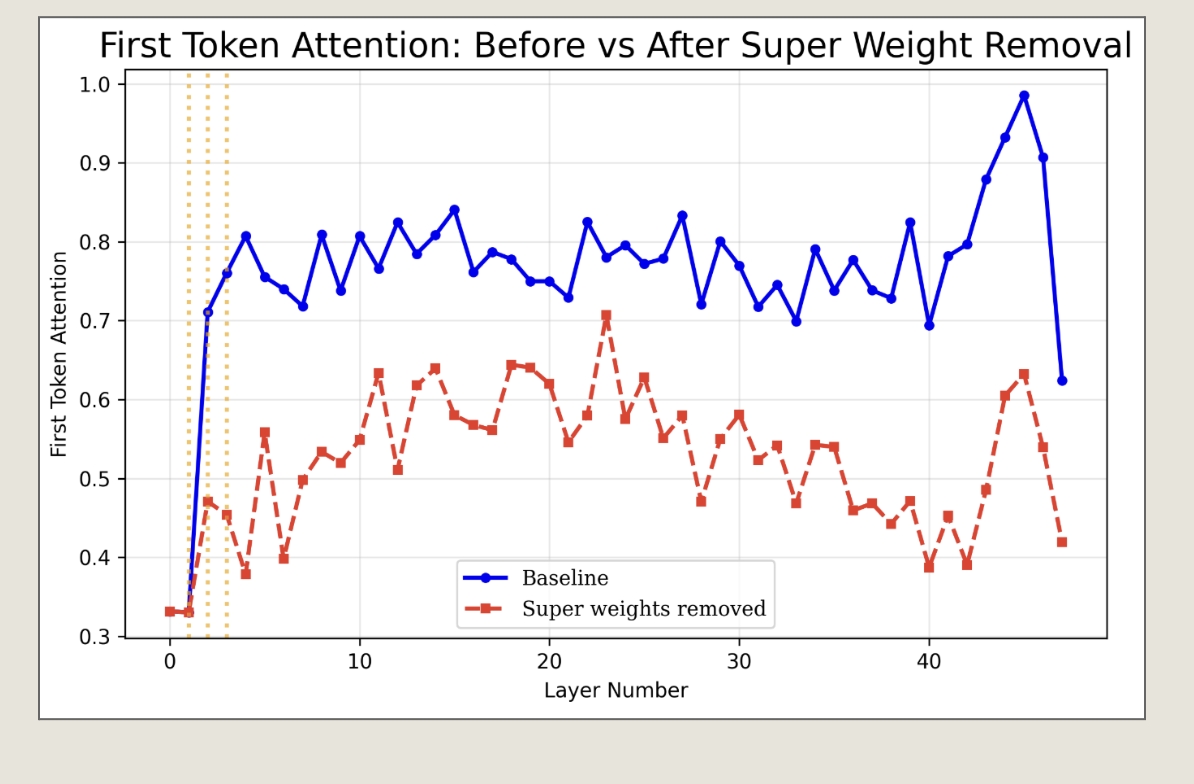

A.6 Removal of super weights in Qwen3 reduces attention on the first token, indicating that super weights are partly responsible for the formation of attention sinks.

The Apple paper claims that super weights are unrelated to attention sinks, but the Tsinghua paper claims that super experts form attention sinks. Our result indicates that the relationship between super weights and attention sink formation is complex and dependent on model architecture.